James Russell, RN-BC, MBA, CVAHP, Value Analysis Program Director, UW Health, WI

In our busy, healthcare-focused careers, buzzwords, buzz-phrases, and acronyms seem to come and go: Thinking out of the box; TQM (Total Quality Management); PDCA (Plan, Do, Check, Act); DMAIC (Define, Measure, Analyze, Improve, and Control); Six Sigma/LEAN; Shared Governance; and many, many more. The latest irrefutable trump card appears to be the word "evidence," as in evidence-based practice (EBP), evidence-based medicine, evidence-based nursing, and so on. This mantra isn't really new, either: according to Mackey and Bassendowski in the Journal of Professional Nursing, EBP can be traced all the way back to Florence Nightingale[1]. None of us can remember back that far (although you have to be pretty old to remember TQM!), but trial and error has shaped healthcare practice throughout history, and remembering what works and what doesn't is hardly a recent concept.

Assessment

All evidence is not created equal.

In our world of Healthcare Value Analysis, evidence has become the secret password for getting new products, services, and equipment adopted by clinical leadership. A savvy manufacturer's sales representative will use the word "evidence" as often as possible when trying to entice clinical experts to buy a drug, machine, or widget. It really doesn't even matter what they're selling. I have honestly had three different vendor representatives from three different companies use the same pseudo-evidence citations to support three extremely different products for inclusion at our facility!

All hospitals face similar challenges. None of us want HACs (hospital-acquired conditions) like nosocomial infections, pressure ulcers, DVT/PEs, etc., in our facilities. Anything we can do to decrease these terrible patient occurrences is something we're interested in. However, vendor representatives know this! One such "never event" is the CLABSI (central line-associated bloodstream infection). In fact, the Centers for Disease Control and Prevention (CDC) reports that these terrible unintended consequences of being a hospital patient cause thousands of deaths annually.[2] Who wouldn't want to help prevent thousands of deaths? I have had a central line manufacturer's representative cite a clinical study as evidence that her antibiotic-coated central line reduces CLABSIs, then a different representative cite the same study as evidence that his specialty-coated central line dressing would reduce our CLABSI rates, and finally a third representative tell me we could really cut down on our CLABSIs if only we'd lather our patients from head to toe with his CHG (chlorhexidine gluconate)-containing products…again, using the exact same "evidence"!

Diagnosis

When considering evidence-based practice, we sometimes forget the word "practice" and substitute "products."

When examining evidence with a critical eye, a few basic statistical principles are helpful. I frequently say this to clinicians: "If you change five things, study them for 60 days, and then measure an outcome, how do you know which of your five things influenced the results? Or was it a combination of more than one of them?" When performing clinical evaluations of products (I try not to let clinicians call them trials; trials get published, evaluations don't), I encourage our clinical experts to change one variable and hold the rest constant as controls. This rigidity doesn't mean the evaluation can't be flexible. It may become apparent only a few days in that things are trending the wrong way. It's okay to say, "Stop!" Change something else and see what happens. Controlling for variability is an important part of ensuring accurate conclusions.

Here's a simple example that I'll return to later: My hospital purchases a dozen different products to help reduce CLABSIs. How much sense does it make to change three of them at the same time and try to draw conclusions from the resulting increase, decrease, or lack of change in our infection rates? One thing at a time. If we want to evaluate a new central line dressing, do that. If we want to evaluate a different needleless connector, do that too…just not at the same time. If we want to see the value of alcohol-based caps on unused central line ports, okay…but that's a separate issue. If time is of the essence, run each evaluation on a separate unit and watch the trends. This isn't ideal; I'd much rather have the same caregivers (or at least the same unit) involved in every aspect of assessing the value of products designed for one purpose (reducing CLABSIs), but we are often engaged in Rapid Cycle Change (another buzz-phrase) and we just can't wait!

Planning

I'd like to set a goal for future conversation by looking back at the beginning of this article. I think we're focusing on the wrong word. Instead of evidence-based practice…let's call it evidence-based PRACTICE! As one of my favorite professional basketball players once lamented, "We're talking about practice!"[3] Below, under "Implementation," are a couple of examples of what I mean.

Implementation

In LEAN terminology, "going to the Gemba"[4] is a strategy in which you physically go to where the actual work is being performed…and watch. It's a great opportunity to check yourself. Is what you think is happening really happening? Do practitioners follow protocols only when someone is looking? Is there a Hawthorne effect[5] present when change occurs?

The Hawthorne effect is actually quite interesting in terms of Value Analysis. It is named for studies at Western Electric's Hawthorne Works factory, in which employees' output was compared under different environmental conditions (dim vs. bright lighting, etc.). The effect describes how the changed conditions didn't improve the employees' performance nearly as much as the simple fact that someone was paying attention to them. In short, when you make a change in one area, people tend to sharpen their focus on the whole operation for a while, but the effect is usually short-lived. You are less likely to leave your clothes on your bedroom floor just after you've cleaned your house. In Value Analysis, you will often see improvement in overall clinician compliance with a protocol right after switching to a new product that requires comprehensive education. In fact, improvement will show up in areas completely unrelated to the product in question.

Example 1: I went to a few clinical areas and hung around for a while. My peers call this "making rounds." I don't make rounds; I hang out. In one area, while gently prodding my customers for ideas that could improve their work lives, I stumbled upon an "aha" moment. Actually, I didn't stumble; a nurse slapped me with it. Here's how our conversation went:

Nurse: Let me explain to you how some CLABSIs might occur.

Me: Okay, go for it.

Nurse: Okay, when I change a central line dressing, sometimes I don't wash my hands.

Me: What? Are you nuts?

Nurse: Here’s what happens. I put on a pair of gloves, peel off the old dressing, take off my dirty gloves and put on clean gloves before cleaning the site and placing a new dressing. I don’t wash my hands between the dirty and clean gloves.

Me: Why not?

Nurse: Because the sink is nowhere near my patient’s central line! And I’ve just uncovered it and left it open and vulnerable. I don’t want my patient reaching their fingers up and contaminating the site while I’m 8 feet away washing my hands.

Me: So use alcohol sanitizer.

Nurse: That’s 10 feet away, even farther than the sink.

Me: Well then…

Nurse: Yes?

Me: Maybe we should put a little bottle of sanitizer in our kits, on top of the gloves, so you almost have to use it before donning the clean gloves.

Nurse: Now you’re paying attention!

This is exactly how we got alcohol sanitizer into our central line dressing change kits. Before making the change, I asked several nurses to walk me through the scenario described by this experienced bedside nurse, and each one was convinced that handwashing wasn't a problem until they got to the part about donning clean gloves right after handling a dirty dressing. They didn't see the issue until it was pointed out. Neither did I. This wonderful nurse changed not only her own practice, but the practice of our entire facility. How cool is that? We didn't change (or add yet another) product…we changed our practice. Many of my best ideas come from the bedside, just like this one.

Example 2: One of my favorite Nurse Managers spent some time spying on her staff. She wouldn't use that term, but it's what she did. While working on her unit, she'd make a special effort to pay attention to something most of us ignore. One week she'd focus on the noise level of her unit. Another week she'd focus on the conversations she (and therefore the patients and their families) could hear. Another time she'd watch how often a "handwashing encounter" (another buzz-phrase) occurred and was skipped.

Her tactic took some courage. She had to resist the urge to correct what she saw on the spot (and thereby tip off her staff to what she was really doing), unless it was egregious. In short, she examined her staff's practice with a critical eye for detail. How's that for evidence? It might not be citable as a reference, but only because it's an observed behavior rather than something read in a journal. Objective occurrences, rather than subjective articles, can be very powerful. I'm not discounting the importance of journal articles…I'm writing one! I'm simply pointing out how impactful "local" data can be. What this nurse leader learned was important: what people think they do may not be what they actually do.

In later conversations with her staff, many of them firmly denied that what she'd seen had actually occurred. Statements like, "I always wash my hands before leaving a patient's room," were common, even though the manager had seen otherwise with her own eyes. Her response, I thought, was excellent. She'd send these folks away with the task of watching one of their peers for the next shift and trying to spot an "encounter" that didn't go as planned. Each of them would return with evidence of someone else breaking a protocol without realizing it. Some of them would admit that perhaps they were guilty of the same thing. There was nothing punitive about this; it was simply an educational exercise for everyone involved. It worked.

Clinical staff began pointing out these occurrences to each other, not as a "gotcha" but as, "I bet you think you washed your hands before coming out of that room, but you didn't." And, "Did you know that your voice carries all the way over here? I could hear you talking about your patient's test results. If I could hear it, so could other patients." By "monitoring" each other, they tightened up their own ship. How great is that? It's too soon to tell whether this will produce sustained improvement in measures like HCAHPS scores or HAC rates (remember Hawthorne?), but we'll be watching the data!

Evaluation

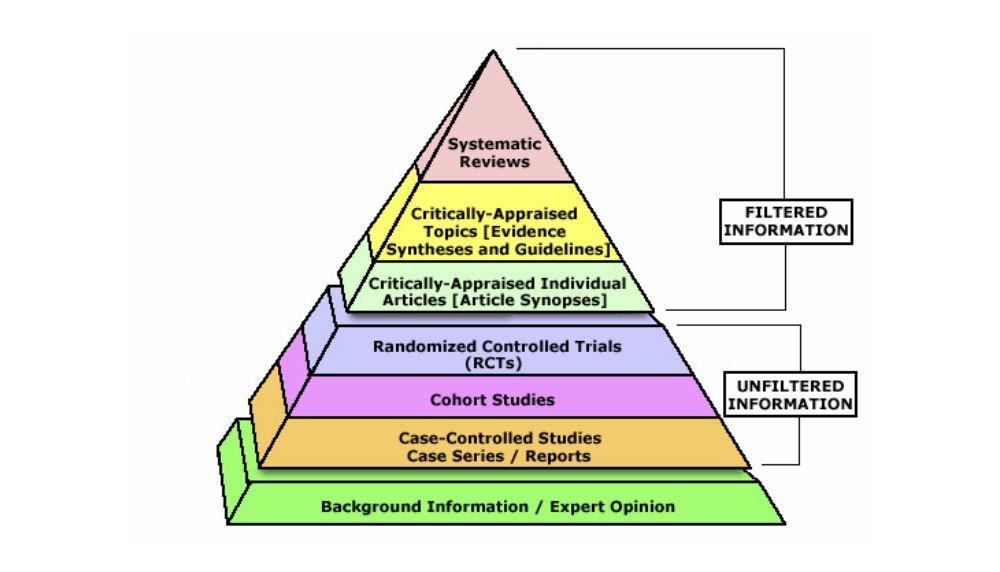

When engaging in evidence-based practice, evaluating the quality of that evidence is always a good idea. I think most of us are now used to searching PubMed for articles and checking whether the authors disclosed any gifts or payments from the companies involved in the studies they wrote about. I think we've all seen the evidence pyramid in Figure 1. Personally, I've had it shown to me on more than one occasion as a reminder of how little weight my "expert" opinion carries. This isn't to say we shouldn't evaluate the evidence we use to support our practice. Quite the opposite. I'm a proponent of full transparency when it comes to evidence.

Did you know that, as a result of the Patient Protection and Affordable Care Act, you can read a clinical study in which a physician extols the benefits of product (or medication) X, then look that physician up on the Open Payments website to see whether they've received money (transfers of value) from the makers of product (or medication) X? Information like this has traditionally been difficult to uncover. Now it's right out in the open, on a publicly searchable website. I tell our doctors that their patients can look them up…and will!

Figure 1 [6]

Whenever I receive a request for a new product from a physician, one of my steps is to check whether significant dollars have changed hands (from the company to the physician) in a way that could create an appearance of impropriety, and whether the physician has listed the transaction on their conflict-of-interest statement. Transparency is my favorite word!

However, I am also an advocate of not solving clinician practice problems with new products. As my nurse manager friend learned, new products aren't the answer to poor handwashing. Earplugs may benefit some patients, but clinicians can also lower their voices (and avoid violating patients' confidentiality while they're at it!).

Learning what clinicians actually do, not what they think they do and not what they say they do, can have an extraordinary impact on outcomes: not just clinical outcomes, but financial outcomes as well. Even patient satisfaction can improve when we all pay attention to our practice. So the next time you hear, "At our hospital, we follow evidence-based practice," listen for the emphasis on the word practice. Question what you're hearing, not just in terms of published, peer-reviewed journal articles, but also in light of the power of first-hand, objective observation. You may be surprised at what you find.

Conclusion

You may have noticed that I used the Nursing Process (Assessment, Diagnosis, Planning, Implementation, Evaluation) as an outline for this article. Why would I do that? Because, in its own way, it's evidence-based practice. There's nothing wrong with using "old" techniques and terminology when they've been shown to be effective. The nursing process is a great example of a well-used template for project management that has stood the test of time. I'd call it evidence AND practice.

[6] http://www.students4bestevidence.net/the-evidence-based-medicine-pyramid/